Misaligned Image Integration with Local Linear Model

( IEEE Trans. on Image Processing, Vol.25, Issue 5, pp.2035-2044, May 2016 )

2 Shinshu University


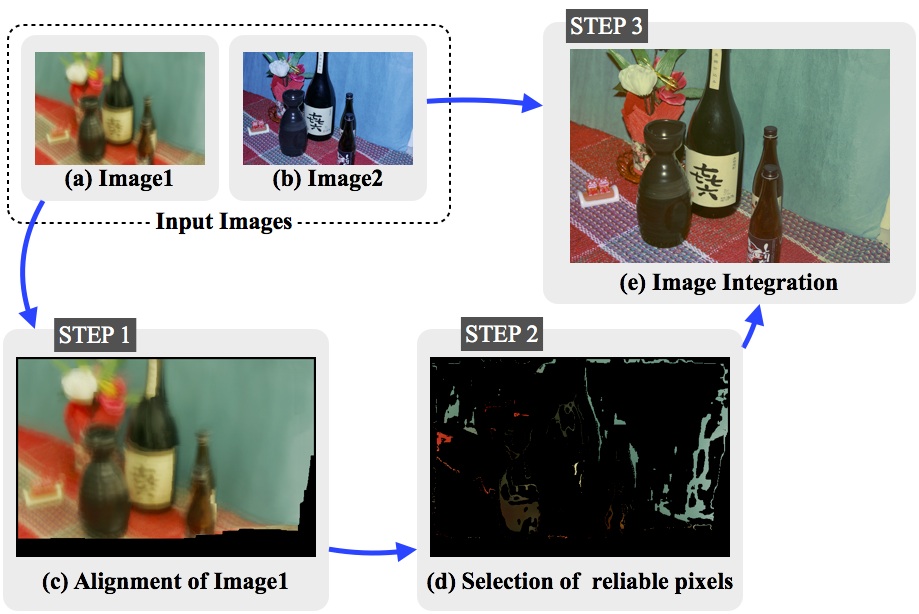

Overview of our image integration. Image 1 (a) is taken with a long exposure time (long-exposure image), while Image 2 (b) is taken with the camera strobe (flash image). A conventional image registration method aligns Image 1 to Image 2 (c), and the aligned pixels are then selected by our thresholding procedure. Finally, our method, based on the local linear model, integrates Image 2 (b) with the colors of the selected pixels (d).

Abstract

We present a new image integration technique for flash and long-exposure image pairs that captures a dark scene without blur or noise artifacts. Most existing methods require well-aligned images for the integration, which is often a burdensome restriction in practice. We address this issue by locally transferring the colors of the flash image using a small fraction of corresponding pixels in the long-exposure image. We formulate image integration as a convex optimization problem with the local linear model. The proposed method integrates the color of the long-exposure image with the detail of the flash image without harming its contrast, and by virtue of our new integration principle the images do not need to be perfectly aligned. We show that our method outperforms the state of the art in image integration and reference-based color transfer on challenging misaligned datasets.
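To make the idea of a local linear model concrete, here is a minimal sketch, not the paper's convex optimization. It assumes that, inside one small window, the desired color is an affine function of the flash color, and it fits that function by ridge-regularized least squares using only the pixels marked as reliably aligned. The function names, the `mask` input, and the `eps` value are illustrative assumptions.

```python
import numpy as np

def fit_local_linear(flash_patch, target_patch, mask, eps=1e-3):
    """Fit target ~= A @ flash + b on one window, using masked pixels only.

    flash_patch, target_patch: (N, 3) float arrays of RGB values.
    mask: (N,) bool array marking pixels assumed to be well aligned.
    eps: ridge weight that keeps the fit stable (assumed value).
    Returns A with shape (3, 3) and b with shape (3,).
    """
    x = flash_patch[mask]
    y = target_patch[mask]
    mean_x, mean_y = x.mean(axis=0), y.mean(axis=0)
    xc, yc = x - mean_x, y - mean_y
    # Regularized normal equations for the affine map.
    cov_xx = xc.T @ xc / len(x) + eps * np.eye(3)
    cov_xy = xc.T @ yc / len(x)
    A = np.linalg.solve(cov_xx, cov_xy).T
    b = mean_y - A @ mean_x
    return A, b

def apply_local_linear(flash_patch, A, b):
    """Recolor every pixel of the window, including unmasked ones."""
    return flash_patch @ A.T + b
```

Because the fitted map is applied to the flash pixels themselves, the output keeps the flash image's detail while taking its colors from the sparse set of aligned long-exposure pixels. This is the intuition behind the approach, under the assumptions stated above.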

Results

Image integration of various scenes

Input images: source image with undesirable colors and target image with desirable colors.

Our image integration result.

Additional results

Results of some applications are shown in the following pages. All results of our image fusion are available here.